Crossing the Street

Even if you are not aware of it, a lot of non-verbal communication takes place when you walk down the street. You observe the behavior of cars, make eye contact with drivers when you want to cross, or use hand gestures to communicate intent. Let's take a typical road crossing as an example.

When pedestrians want to cross a street and a car is coming, they typically follow the same pattern. While the car is still far away, pedestrians first check the environment or the road space ahead of the car. As the car comes closer, their gaze gradually shifts to the car's windshield. If the car does not behave as expected, pedestrians look up further and eventually make eye contact with the driver. In fact, in certain scenarios, 84% of pedestrians make eye contact with the driver.

A pedestrian crossing is just one of many examples of the importance of non-verbal communication in traffic. But do autonomous cars need a substitute for this behavior?

The Case for eHMIs

In theory, if an autonomous car behaves as expected, there is no need for this communication. In practice, although there is some doubt, most experiments with autonomous cars have shown that a substitute for non-verbal communication between autonomous cars and other road users is needed. In these experiments, often run in simulators or as Wizard-of-Oz tests, confusing or conflicting interactions occur between autonomous cars and pedestrians when there is no communication.

It is in the best interest of car companies to prevent such interactions, both for the sake of safety and to improve traffic flow. This means creating some form of interface on the outside of the car. This external human-machine interface, or eHMI, can take many forms, from a simple light or audible tone to elaborate displays.

The most important problem to solve when designing this eHMI is what information it should communicate.

What Information to Communicate?

Answering this question is probably the hardest part of designing an eHMI, and the answer depends on many factors. Current research points to three main focus areas: driving mode, intent, and perception.

Driving Mode

A world where fully autonomous (level 5) cars zip around town is likely still far away. Until then, autonomous cars will be driven partly by humans and partly by computers. It is important to let other road users know who is driving the car: the human or the computer. There is a general consensus that communicating the driving mode is essential for the trust and safety of other road users.

Intent

Let's go back to the example of the pedestrian crossing. If an oncoming car is not behaving as desired, the pedestrian will try to make eye contact with the driver to understand their intent. This simple interaction is enough to communicate whether the driver has seen the pedestrian and whether they will slow down. As with driving mode, research on intent is largely conclusive about the need to communicate it. However, there is some nuance in how to communicate intent. Should the car be specific and communicate that it is yielding to a pedestrian, or is it enough to show that the car has detected the pedestrian?

In any case, it is clear that the eHMI should avoid giving explicit instructions to road users. First, some actions, such as the way pedestrians cross the street, are highly individual. Second, circumstances may call for different actions from different pedestrians interacting with the vehicle at the same time. Finally, there is the question of liability. If an autonomous car gives an explicit instruction to a pedestrian and an accident occurs, is the car to blame?

Perception

Communicating intent targets the road users the car is directly interacting with. The car can go one step further and communicate the objects it detects, even when it does not interact with them directly. For example, the car could signal that it has detected pedestrians or cyclists while driving past them. Compared to driving mode and intent, it is less clear whether this has a noticeable benefit. Most experiments come to the same conclusion: it is nice to have but not essential for safe and efficient interactions.

A lot of research is still needed to figure out exactly what to communicate in different scenarios. It is clear, though, that driving mode and intent are two crucial areas to investigate further. The logical next question is: how should an eHMI communicate them?

How to Communicate

Context

Up until now, we have assumed the target group to be homogeneous. In reality, it is probably the most diverse group you could design for. A pedestrian could be anyone, and the way a child interprets information from an eHMI differs from the way an elderly person does. Similarly, we have to take into account different modes of transportation, cultural differences, people with disabilities, and differences in infrastructure and traffic law.

Therefore, the interface must be easy to understand, unambiguous, and perceptible under various environmental conditions without being distracting. It should scale to support communication with multiple road users simultaneously. Furthermore, it should establish the right amount of trust, so that road users neither overtrust nor undertrust it.

It is also worth reiterating that the purpose of an eHMI is to facilitate communication when the car's behavior does not match road users' expectations. So before developing an eHMI, the implicit behavior of the car should be optimized.

Design

Research on how to design an eHMI is still limited. There are few controlled studies on eHMI design today, and most experiments focus on visual communication, which has limitations of its own.

For example, many experiments using text to indicate driving mode and intent show promising results. Text seems to be the easiest to learn and the least ambiguous. But an eHMI that relies on text alone excludes large groups such as children, the visually impaired, and people who speak a different language.

Instead of, or in addition to, text, an eHMI could use lights and symbols. Here, some concrete research can help. For example, when it comes to communicating the driving mode, flashing lights seem to perform better than more subtle or horizontally sweeping animations. The same goes for intent: pedestrians cross sooner with a flashing signal than with a sweeping animation.

There is also a surprising amount of good research on the color of the lights. Say we want to use a light bar to show which driving mode the car is in. We quickly run into restrictions on color. For example, we can't use blue, red, or orange because, in many countries, these colors are already reserved for emergency services or other vehicle signals. Several experiments have explored color for eHMIs, and they all conclude that turquoise, or cyan, is the color that leads to the least misunderstanding.

The interface should be placed where it is most visible. One experiment showed that, averaged over the period in which the car approached and slowed down, eHMIs on the roof, windscreen, and grille performed best. Pedestrians also seem to have a rightward bias, so the right side of the windshield could be the best location. In practice, though, it is likely more complex than that, especially given differences in infrastructure design and driving side between countries. Mercedes has published research concluding that the signals should be placed all around the car so that they are visible from every angle.

This research does not yet offer much concrete guidance on eHMI design. What we can conclude is that a safe, effective eHMI relies on multiple modalities. Most of the limited research today focuses on visual communication. Judging from those experiments, some form of cyan flashing lights or symbols placed around the car yields the best results. These could be paired with text, but text comes with the limitations described above. Beyond visual communication, there is a whole range of modalities that have barely been researched, such as sound design or implicit behavior like biomimicry. Car companies have also been researching eHMIs but often don't publish their results, so let's look at some concept cars to see how they have tackled this problem.

Examples From the Car Industry

Nissan IDS Concept

This concept from Nissan communicates the driving mode via a light strip running across the car. Like most concepts, it uses a cyan light band. It also has an LED screen that appears in fully autonomous mode and displays short instructions and other personal messages in Japanese and English. In that sense, it is not fully in line with the research, as it relies on text-only messages.

Volvo 360c Concept

The Volvo 360c is interesting because it is a fully autonomous concept that takes a minimal approach to the eHMI. The car has a 360° light strip that communicates intent and perception. It is one of the few concepts that communicates perception, so it would be interesting to know what led to this decision. The light strip is in line with Mercedes' research showing that the eHMI should be visible from every angle. Interestingly, the car also uses sound to communicate intent and perception, but it is hard to find out exactly how the sound is designed.

Smart EQ ForTwo Concept

This futuristic concept by Smart uses a complex color display to communicate driving mode and intent. The display can show a wide variety of UI elements and even goes as far as showing images. It is unclear exactly how it works, but it can show information such as the street the car is currently on. When communicating intent, the display shows both symbols and text.

Jaguar Land Rover Virtual Eyes Concept

This concept by Jaguar Land Rover experiments with human-like features. The vehicle uses a pair of virtual eyes to make eye contact with pedestrians. I haven't found any results of their experiments, but by using these 'natural' features, the car may be more intuitive for pedestrians than displays that show more abstract information.

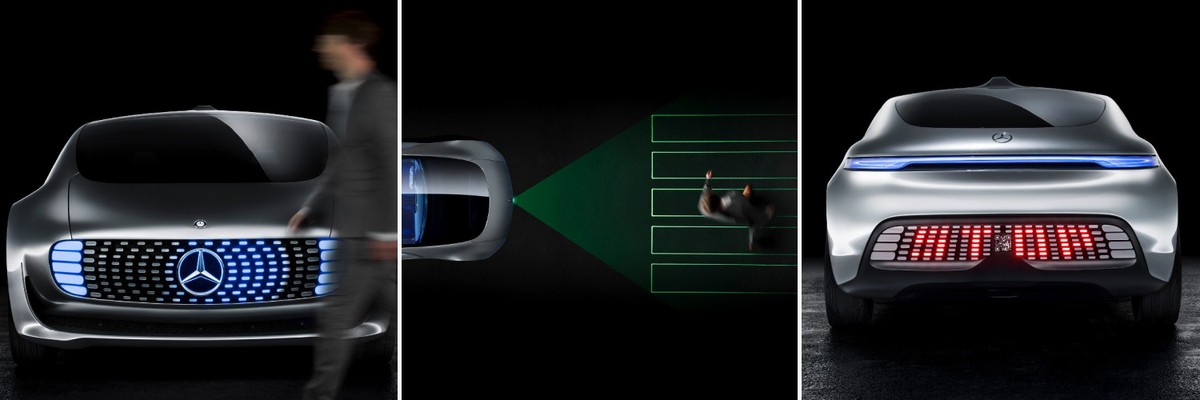

Mercedes-Benz F015 Concept

The Mercedes F015 Concept is probably the most elaborate. It uses an LED matrix in the front and back to communicate perception and intent via text and lights. On top of that, it has a laser projection system that can display information on the street, such as a zebra crossing for pedestrians. It is probably the most thought-through concept that exists today. Take a pedestrian crossing as an example: the car uses multiple modalities to communicate with the pedestrian.

The car uses its laser projection system to display a zebra crossing. Its LED matrix highlights the pedestrian and shows a wave or crossing animation. It also uses a voice message to tell the pedestrian it is safe to cross. Clearly, Mercedes is confident that its car can give these explicit instructions and that no accidents will occur.

Human Horizons HiPhi Concept

This concept from Human Horizons, a Chinese startup, is close to production, so it may become the first production car with an eHMI. It is quite similar to the Mercedes concept: it uses LED matrix displays in the front and back to show symbols and animations, and it has a similar laser projector that can display information on the ground.

The car industry is moving at a rapid pace, and some early eHMI concepts may enter production soon. One crucial point that I haven't mentioned yet is standardization. Just as with other traffic safety systems like traffic lights and turn signals, it is beneficial to introduce standards and legislation around eHMIs. It would be unproductive and unsafe if each brand came up with its own set of interactions.

Autonomous cars will likely need an external interface to substitute for non-verbal communication with other road users. The research that exists today, from both academia and the car industry, is only the tip of the iceberg. With some confidence, we can say that the focus should be on communicating the driving mode and intent of the car. But how to do so remains an open question, and there is still much to explore.
