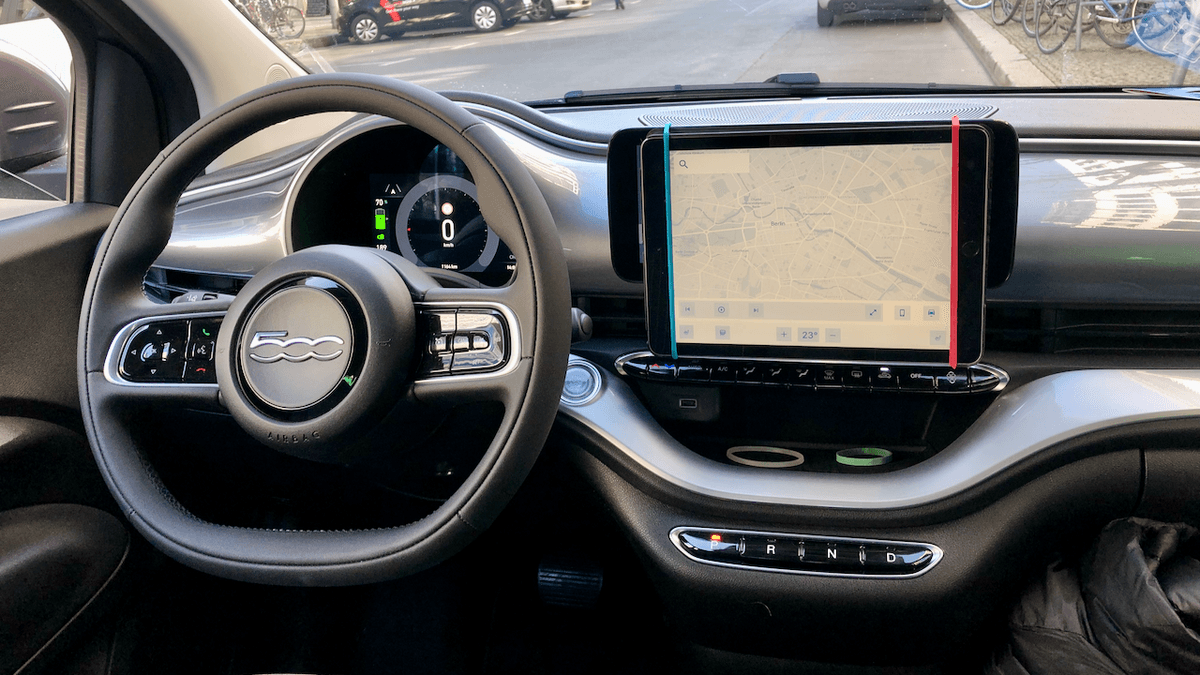

My dream scenario is one where I can send my prototypes remotely to my car's infotainment system and try them while driving. Unfortunately, there are several technical and legal restrictions to this. My go-to method so far has been to mount a tablet running a Figma prototype to the dashboard.

This gives a good insight into the position of the interface in the interior, though the real value comes from driving and trying the prototype at the same time. Given that I don't have a test track in my backyard, I can only ask a colleague to drive while I play with the prototype from the passenger seat. Unfortunately, it is not possible to do this multiple times per week because it costs too much time and energy.

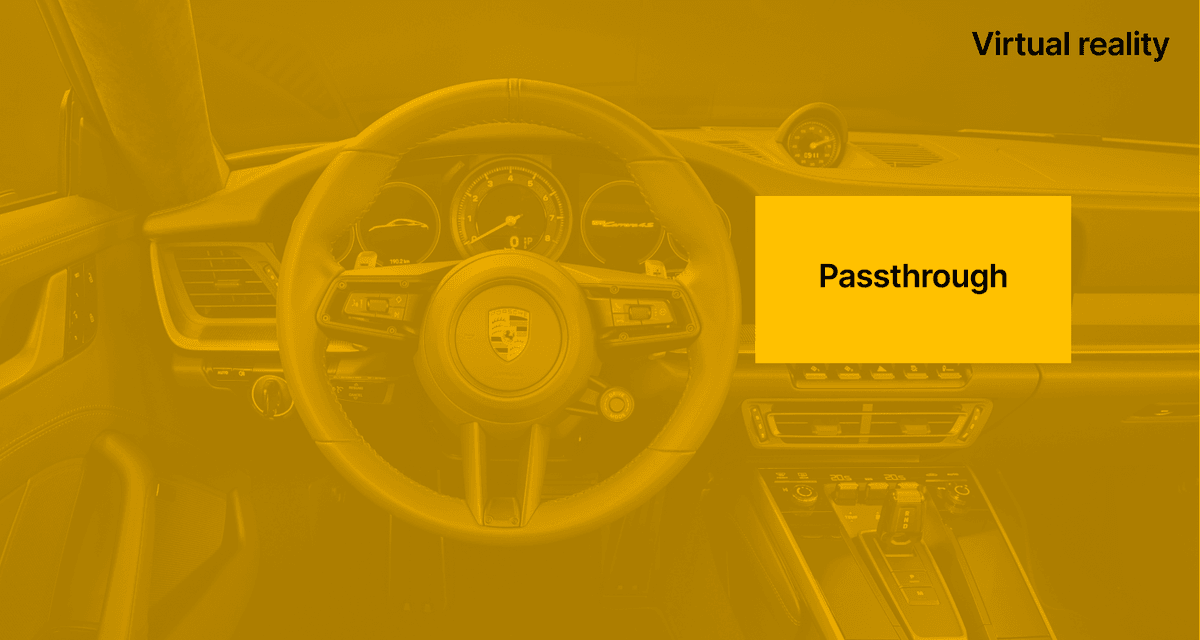

Virtual reality is often mentioned as an alternative and has been used in car design for several years. But it isn't good enough for UX design. Many VR prototyping tools don't work well because they require experience with Unity or other 3D software, which many digital product designers lack. It also takes too much time to design something in Figma, export it to a prototype tool, and then to Unity.

However, the most important reason it doesn't work well is that it’s not good at simulating real-world interaction. It's hard to judge an interface's usability when the text is hard to read due to the low resolution of the headset, and your fingers go straight through the buttons you want to press.

For this to work, it must be possible to touch an actual display. Additionally, the barriers to testing an interface must be as low as possible in terms of the required skill level and energy.

The Idea

Since studying human-computer interaction at university, I've been excited about mixed reality and exploring different forms of interaction with it. When Meta announced the Quest 3, the first affordable mixed-reality headset, I had to get one. After playing with it, I realized it has all the components to create a better testing experience.

Instead of trying to get a prototype working inside a virtual environment, I can leave that part to the real world and use a tablet.

The only thing a designer would need to test an interface is access to a gaming wheel. Then, all it takes is to load the prototype on a tablet and put on a Quest. I gave myself a weekend to check whether it would work.

Day 1: First Version

I first familiarized myself with the Quest and created a basic scene in Unity. Since it was my first time creating something for the Quest, I spent quite some time setting up my environment in Unity. The process and documentation for the Quest are more difficult than those for the Apple Vision Pro. It is not technically complex, but it did take me a couple of hours to find my way through the many pages of documentation and set up a Unity environment for VR on my Quest. Once that was working, adding a free car and road asset was straightforward.

What wasn't straightforward was figuring out how to create the 'passthrough', the area that would show the tablet in the 'real' world. The Meta documentation and samples are complex, but I found a YouTube tutorial that helped me set up a basic passthrough object.

With that setup, I could put on my Quest, calibrate the camera and passthrough, and that was it!

After playing around in this environment, I found it promising. Interacting with a physical prototype is much better than a virtual one. It’s surprising how much the tablet appears to be part of the vehicle if it is in the correct location. The one thing I did notice right away is that the resolution of the Quest cameras is not high enough to read small text easily. As it was getting dark outside at that point, the low light may have impacted this.

From this, I wanted to improve three things:

- Test the camera feed in better light

- Create a more realistic Unity scene

- Use my gaming wheel as the input device

Day 2: Turning It Into a Simulator

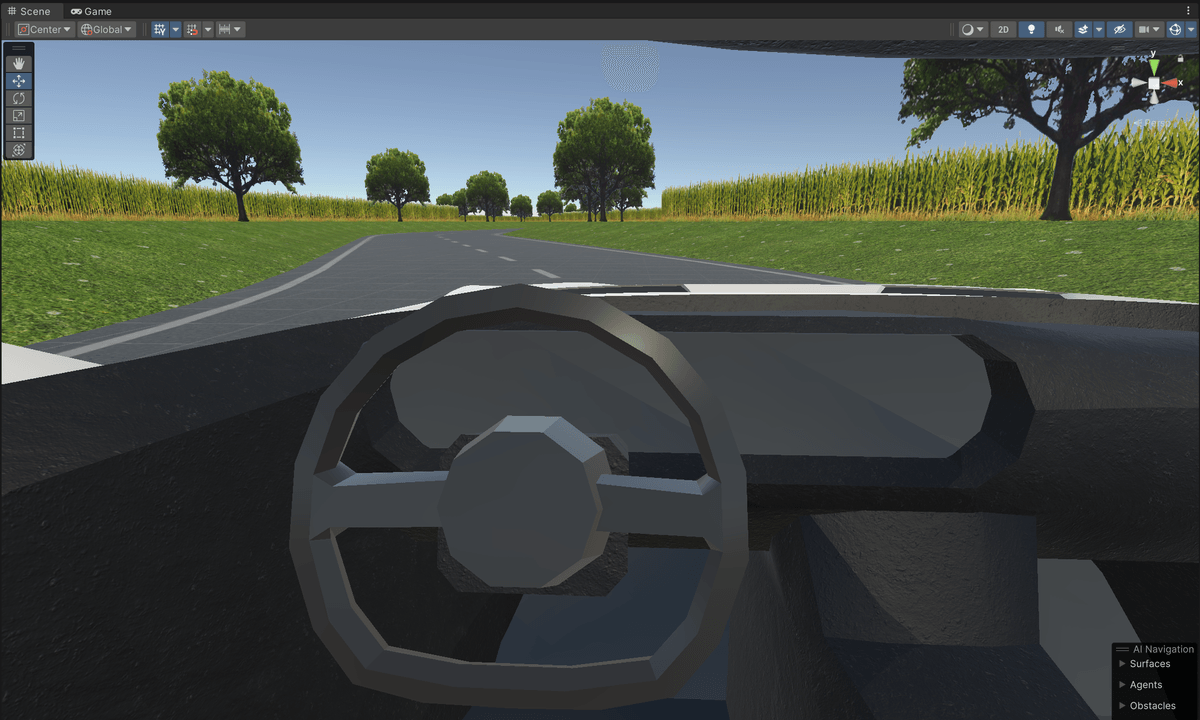

In the Unity asset store, I found the free Vehicle Physics Pro asset, which was the perfect low-effort, high-return package I needed. It includes a city environment, a pick-up truck model, and basic driving physics.

Next, I connected my gaming wheel, which was quite easy in Unity. I simply changed the input method from keyboard to joystick and adjusted the controls. I then spent some time fine-tuning the passthrough object, lighting, and wheel physics. The most annoying part was calibrating everything. Hopping from my desk to the simulator, putting the headset on and taking it off, and getting the positions right was quite a workout.
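To give an idea of what "adjusting the controls" for a wheel usually involves, here is a minimal sketch of mapping a raw wheel axis value to a steering angle. It is written in plain Python rather than Unity's C#, and it is not the code from my project: the function names, the 5% dead zone, and the 35-degree maximum steering angle are all assumptions chosen for illustration.

```python
def apply_deadzone(raw: float, deadzone: float = 0.05) -> float:
    """Map a raw axis value in [-1, 1] to [-1, 1], ignoring tiny inputs.

    Values inside the dead zone return 0 so the car does not drift when
    the wheel rests slightly off-centre. The rest of the range is
    rescaled so that full lock still returns exactly +/-1.
    """
    raw = max(-1.0, min(1.0, raw))  # clamp noisy hardware values
    magnitude = abs(raw)
    if magnitude <= deadzone:
        return 0.0
    scaled = (magnitude - deadzone) / (1.0 - deadzone)
    return scaled if raw > 0 else -scaled


def steering_angle(raw: float, max_angle_deg: float = 35.0,
                   deadzone: float = 0.05) -> float:
    """Convert a raw wheel axis value to a front-wheel angle in degrees.

    max_angle_deg is a hypothetical value, not a setting from the project.
    """
    return apply_deadzone(raw, deadzone) * max_angle_deg
```

In a game engine, a function like `steering_angle` would be called once per frame with the latest axis reading, and the result passed on to the vehicle physics.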

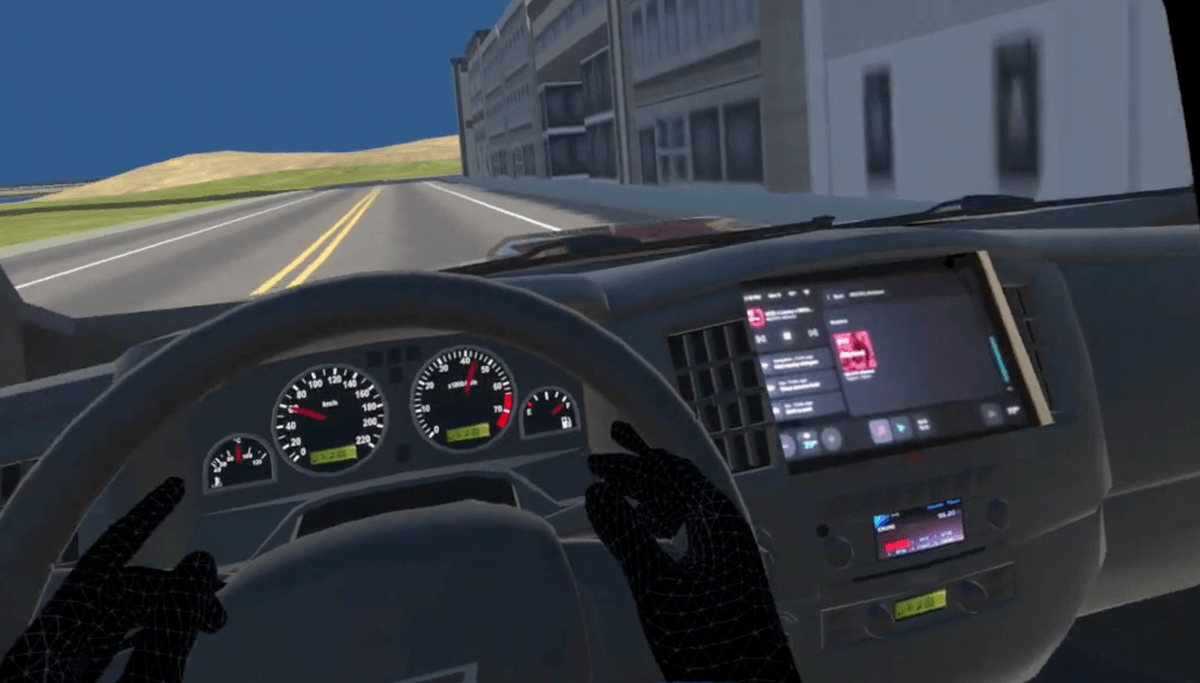

But once I got it right, the result was very interesting.

Is Mixed Reality a Good Way to Test In-Car Interfaces?

From the short time I spent testing it, I think mixed reality is promising for testing in-car interfaces. I was positively surprised by how real the interface looked in the interior while driving. The interaction feels close to real life. This unrefined virtual environment already gave me a totally different perspective than testing the interface at my desk. However, there is a lot of room for improvement.

One major drawback is the calibration, which slows down the process. This can be easily solved. Most racing simulation games have a 'reset camera' shortcut for correcting the camera position within the simulation, and the same could be added here. Another improvement would be to create an interface inside the virtual environment to calibrate the passthrough object, to avoid having to hop in and out of the simulator.
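A 'reset camera' shortcut boils down to a small piece of geometry: take the headset's current pose and compute the offset that moves it onto the driver's seat. Below is a rough sketch of that calculation in plain Python. It does not use any Unity or Meta SDK API, and the `Pose` type, the function names, and the Unity-style convention (y is up, yaw is measured around the vertical axis) are my own assumptions for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float        # metres, sideways
    y: float        # metres, up
    z: float        # metres, forward
    yaw_deg: float  # heading around the vertical (y) axis


def rotate_y(x: float, z: float, angle_deg: float) -> tuple[float, float]:
    """Rotate a point in the horizontal plane around the vertical axis."""
    a = math.radians(angle_deg)
    return (x * math.cos(a) + z * math.sin(a),
            -x * math.sin(a) + z * math.cos(a))


def compute_recenter(head: Pose, seat: Pose) -> Pose:
    """Return the offset (rotate first, then translate) mapping head onto seat."""
    # Wrap the yaw difference into [-180, 180) so we take the short way round.
    yaw = (seat.yaw_deg - head.yaw_deg + 180.0) % 360.0 - 180.0
    rx, rz = rotate_y(head.x, head.z, yaw)
    return Pose(seat.x - rx, seat.y - head.y, seat.z - rz, yaw)


def apply_offset(pose: Pose, offset: Pose) -> Pose:
    """Apply a recenter offset to a tracked pose."""
    rx, rz = rotate_y(pose.x, pose.z, offset.yaw_deg)
    return Pose(rx + offset.x, pose.y + offset.y, rz + offset.z,
                pose.yaw_deg + offset.yaw_deg)
```

The offset would be computed once when the shortcut is pressed and then applied to every tracked pose afterwards. Only yaw is corrected here, on the assumption that the headset already reports pitch and roll relative to gravity.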

These improvements would help, but there is still a hardware limit. The Quest 3's camera feed quality is not high enough. As you can see from the image below, there is a big difference in resolution between the instrument cluster, which is rendered by the Quest, and the prototype on the tablet.

This is just a screenshot, so it's better when wearing the headset, but still not good enough. Even in a well-lit room, it takes much more time and cognitive effort to focus the eyes and interpret the interface. I spent significantly more time focused on the interface than on the road, so this is not yet an accurate enough simulation of a real-world interaction. Consequently, measuring task-completion time or other distraction-related metrics won't yield accurate results.

That doesn't mean the concept in its current form is useless. I will continue to use it because, despite its limitations, it gave me great insight into how my prototype would work in a driving context with relatively low effort. It also won’t be long until there is an affordable headset on the market with the hardware quality of the Apple Vision Pro and the prototyping capability of the Quest. At that point, this will become a fantastic way to quickly test prototypes with low effort, especially once features like built-in eye tracking are taken into account.

If you are interested in trying out my basic Unity environment, you can find the file with installation instructions on GitHub.
