In the '90s, the average new car came with simple climate controls, a radio, and a CD player. Today's cars are very different because we want to do many more non-driving-related activities behind the wheel. We listen to music streaming services, podcasts, and audiobooks. We send messages, make phone calls, and use navigation apps to find the best way around traffic. All of this distracts us from driving.

We could forbid carmakers from offering these features, but that would probably lead to even more smartphone use while driving and, ultimately, more driver distraction. How can carmakers design in-car interaction that offers all these new services while limiting driver distraction? An often-mentioned solution is voice interaction. It sounds great because drivers don't have to take their eyes off the road. But is voice interaction really a valid way to mitigate driver distraction? Let's find out!

How do we divide our attention?

Imagine you are driving home from work. As you approach an intersection with a red light, you receive a message on your phone. You take it out, read the message, and reply. In doing so, you forget to look at the road and run the red light. Why couldn't you multitask in this scenario?

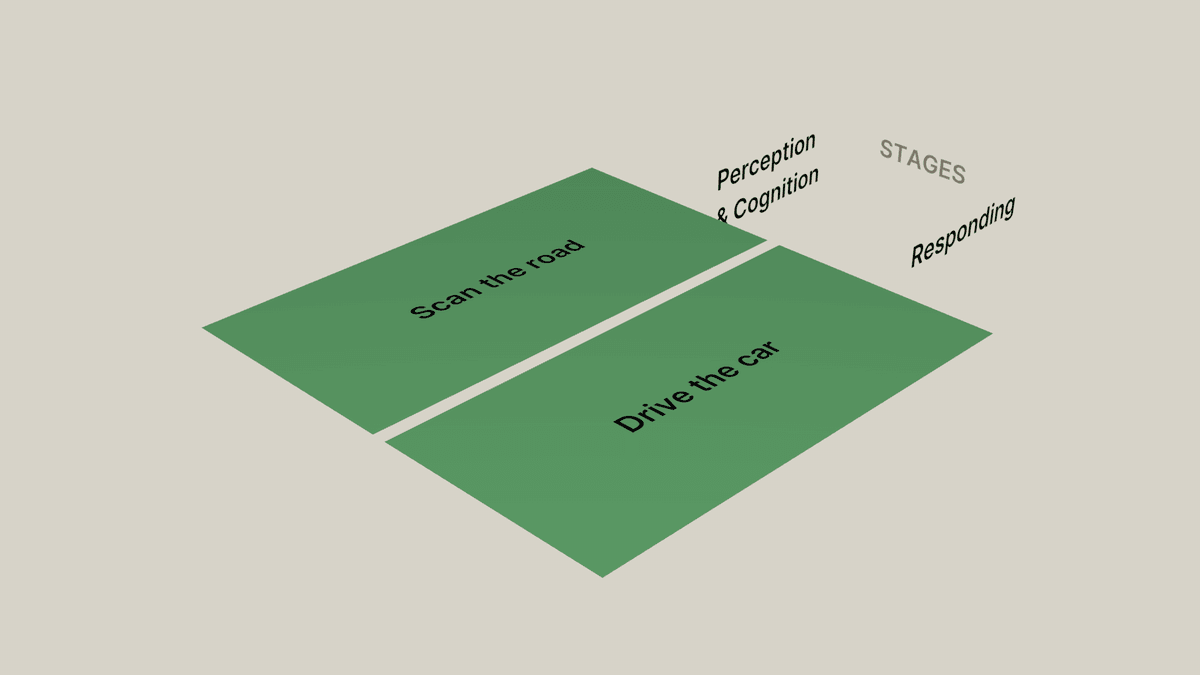

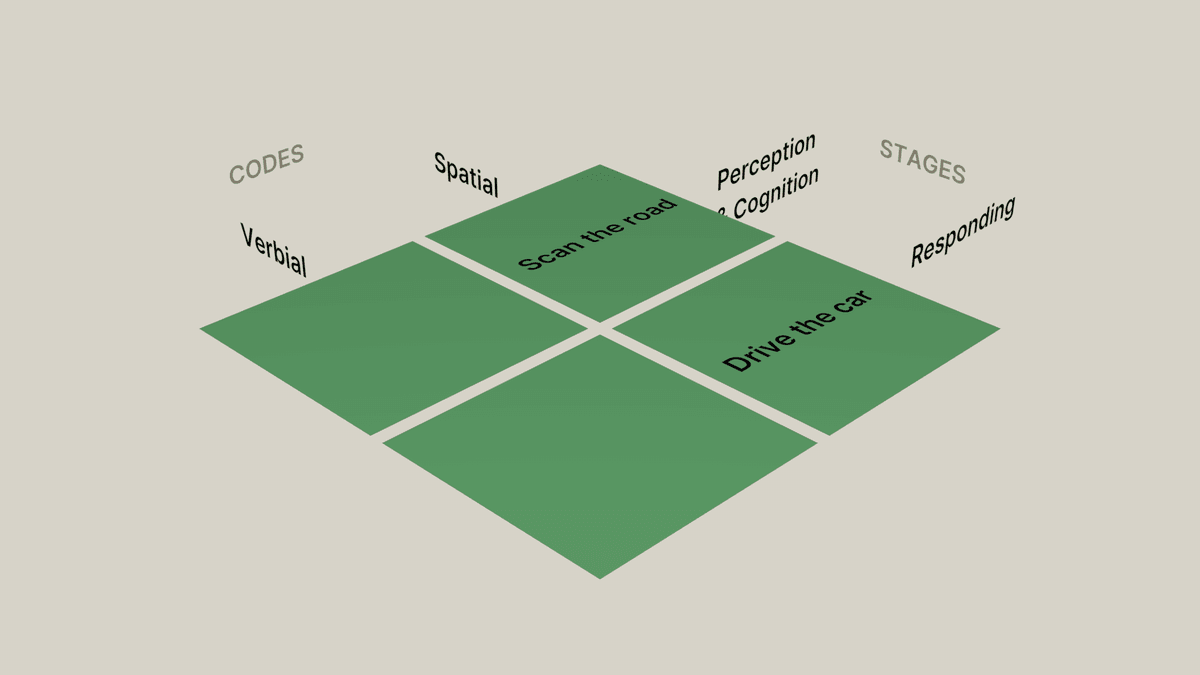

In 1984, Christopher D. Wickens created the Multiple Resource Theory, a model that explains how we divide our attention. Let's fill in this model to discover why we can't look at our phones and drive at the same time.

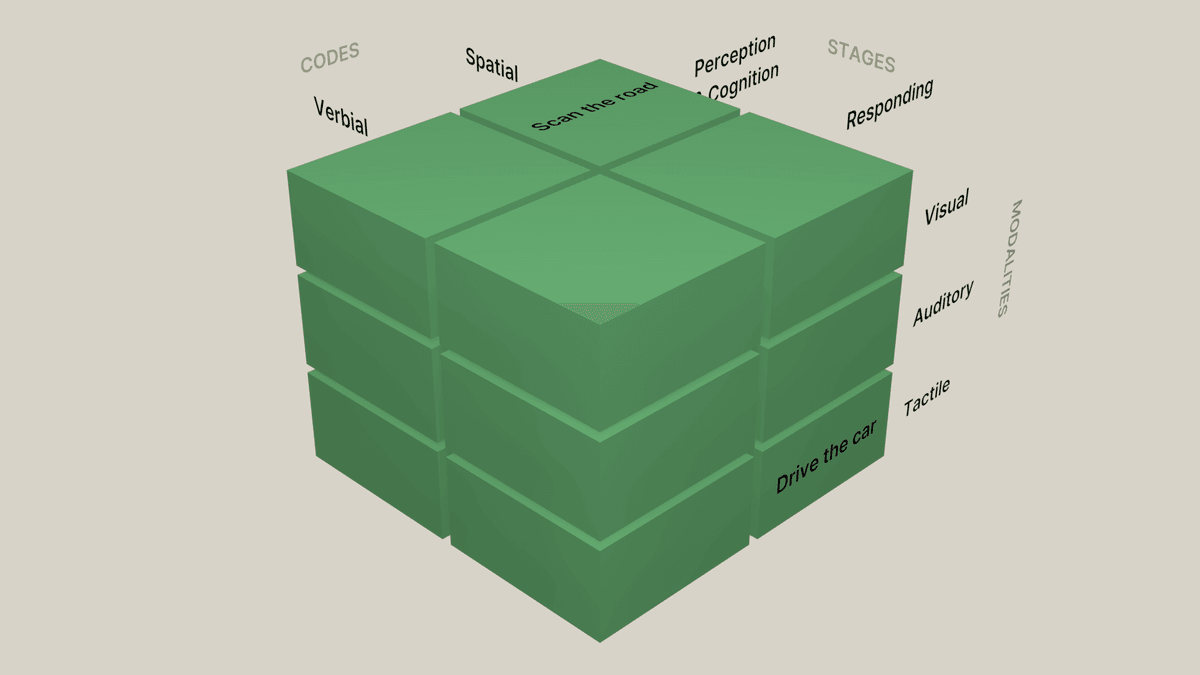

The Multiple Resource Model proposes that attention is divided along three dimensions. The first dimension consists of two stages: perception and cognition, and responding. Adding the driving task to the model looks like this:

You perceive the road ahead and respond to that input by driving the car accordingly. The second dimension describes how input is processed in two different codes: spatial activity uses different resources than verbal/linguistic activity. In our example, all the activity is spatial.

The third dimension describes how attention can be divided across three modalities: visual, auditory, and tactile. Scanning the road is a visual task, while slowing down the car is a tactile one.

The core idea behind the Multiple Resource Theory is that two tasks interfere more with each other when they share the same stage, modality, or code. By mapping time-shared tasks onto the model, you can predict whether they will interfere.

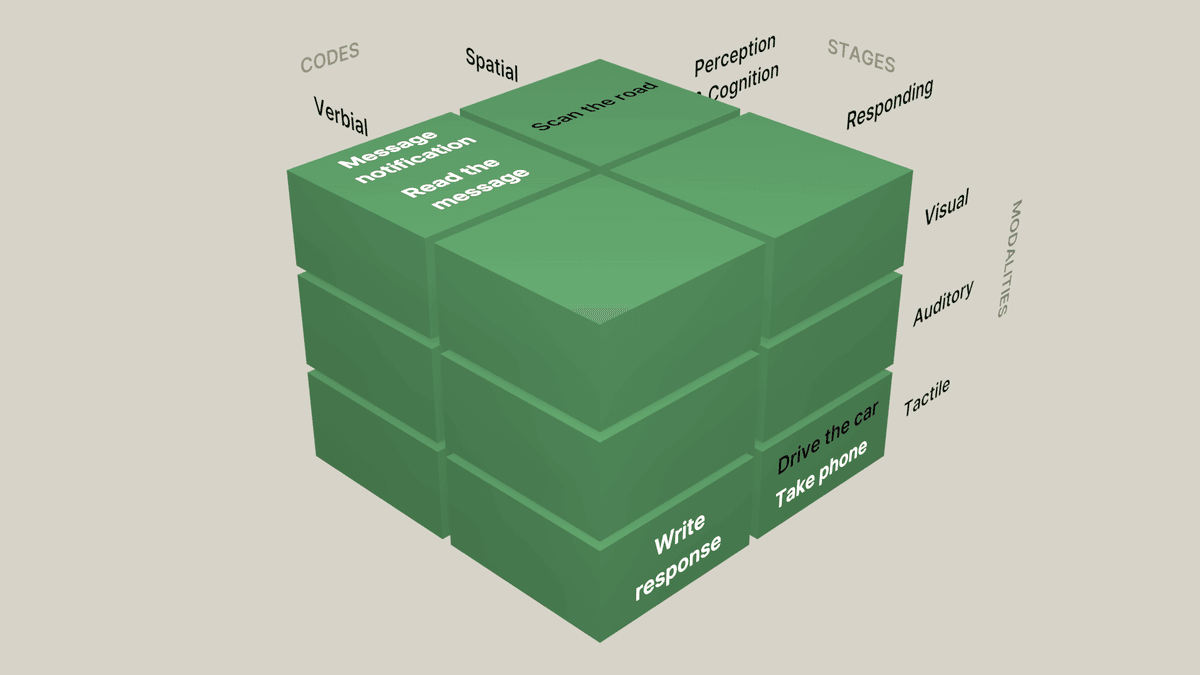

So let's go back to the example, and add the incoming message to the model.

Seeing the message notification and reading it interfered with scanning the road. Taking out the phone and responding to the message interfered with driving the car. There is a lot of competition for attention in the visual and tactile modalities. As a result, we don't have enough cognitive resources to multitask properly, and we miss the red traffic light.

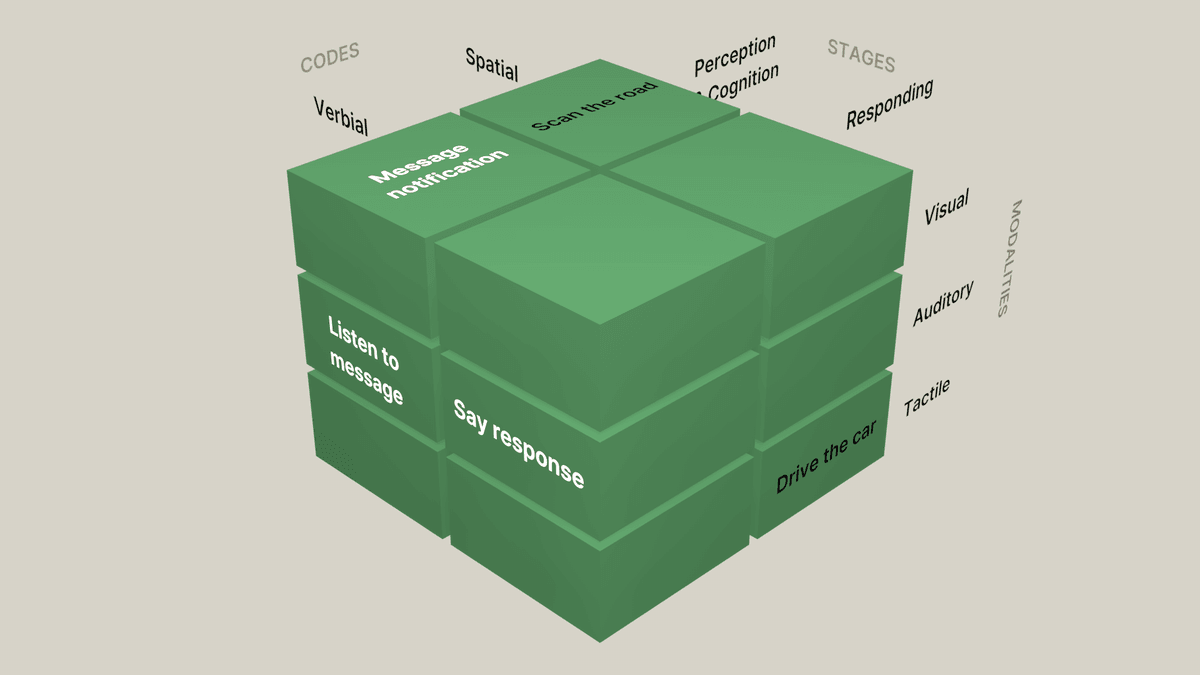

The auditory modality is not used in the example. According to the model, we can reduce the interference between tasks if we move some of them there. Let's imagine that we used a voice assistant, such as Siri, to listen to the message and reply to it. There is a lot less interference.
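To make the model's prediction concrete, here is a toy sketch in Python. It scores each pair of time-shared tasks by how many of the three dimensions they share (0 to 3) and sums that overlap between the driving tasks and the message tasks. This is an illustration under assumptions, not Wickens' published model: the task labels, the simple overlap count, and placing a dictated reply in the auditory modality are all simplifications chosen for this example, and it ignores how demanding each task is.

```python
from dataclasses import dataclass
from itertools import product


# Hypothetical encoding of the three dimensions described in this post.
# The labels are illustrative and not taken from Wickens' own notation.
@dataclass(frozen=True)
class Task:
    name: str
    stage: str     # "perception" (incl. cognition) or "responding"
    modality: str  # "visual", "auditory", or "tactile"
    code: str      # "spatial" or "verbal"


def interference(a: Task, b: Task) -> int:
    """Count the dimensions (0-3) that two time-shared tasks have in common."""
    return sum([a.stage == b.stage, a.modality == b.modality, a.code == b.code])


def conflict_with_driving(driving: list[Task], secondary: list[Task]) -> int:
    """Sum the overlap between every driving task and every secondary task."""
    return sum(interference(d, s) for d, s in product(driving, secondary))


driving = [
    Task("scan the road", "perception", "visual", "spatial"),
    Task("steer and brake", "responding", "tactile", "spatial"),
]
texting = [
    Task("read the message", "perception", "visual", "verbal"),
    Task("type a reply", "responding", "tactile", "verbal"),
]
voice = [
    Task("listen to the message", "perception", "auditory", "verbal"),
    # Assumption: speaking is mapped to the auditory modality for this sketch.
    Task("dictate a reply", "responding", "auditory", "verbal"),
]

print(conflict_with_driving(driving, texting))  # 4
print(conflict_with_driving(driving, voice))    # 2
```

Under this simple count, handling the message by voice halves its overlap with driving (2 versus 4). Because the score only looks at shared dimensions, it cannot capture task difficulty or links between the senses.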

If this sounds too good to be true, it probably is. Since the model was conceived in 1984, scientists have learned a lot more about how humans multitask and have challenged the theory. One of those scientists is Charles Spence. He found it limiting to look at each sense separately, because the senses are, in fact, closely linked. For example, in a 2004 experiment, he showed that changing the sound of the crunch of potato chips changed how people perceived the chips' crispness and freshness.

A large body of research has since shown that, contrary to the Multiple Resource Theory, people typically combine the different streams of sensory information into one representation of the external world. This shows up during multitasking: the harder a task is in one modality, the more performance on another task deteriorates due to capacity constraints, even when that other task uses a different modality. For example, aviation studies found that when a critical audio message comes in, pilots are so focused on it that they neglect the visual task of flying. In those cases, attention couldn't be shared between the two modalities.

Another example: when people talk about visual topics, they engage the perceptual system that handles vision. If you ask people driving a simulator whether “the letters on a stop sign are white”, they show greater signs of visual distraction than when they answer non-visual questions.

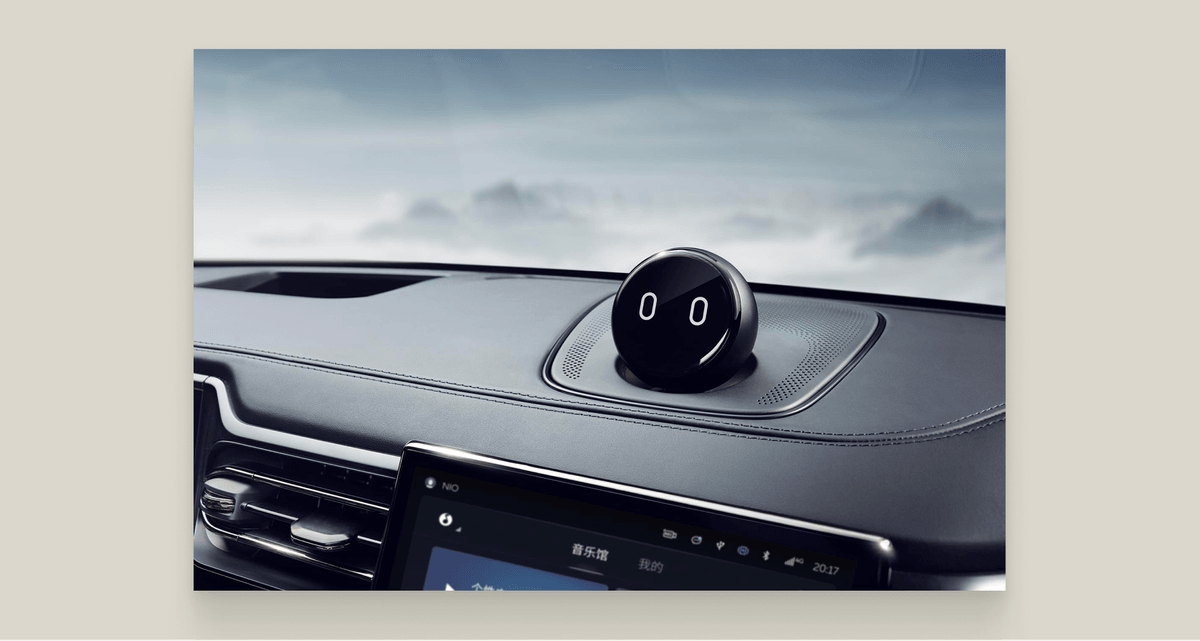

One key finding from crossmodal perception research that relates to driving is the strong effect of space. People find it difficult to divide their attention between different locations. This means that auditory information should come from the same location as visual information, which in a car means in front of the driver. So according to the theory of crossmodal perception, visual, auditory, and spatial information are not totally separate; they have much more complex relationships.

Is a voice interface better than another modality, like touch input? That is hard to say from the theory alone. The risk is that if voice interaction requires a lot of cognitive resources, it will impact the driving task. So we have to dive into the practical research and find out what impact high cognitive load has on driving.

The impact of cognitive load on driving

Have you ever zoned out while driving and 'woken up' minutes later with no recollection of how you kept the car on the road? This phenomenon is called highway hypnosis, and it occurs when driving a very familiar stretch of road. Like a form of autopilot, your brain takes over tasks like lateral vehicle control and keeping a following distance, so your mind can wander freely.

Interestingly, something similar happens when driving under high cognitive load. Highly practiced behaviors, like keeping the car inside its lane, become automated over time. For example, solving a difficult math problem while driving has little effect on this automated driving behavior. In fact, some experiments show that cognitive load even reduces lane deviation.

That doesn't mean we should all drive under high cognitive load. Speed, for example, is also controlled automatically. This is not necessarily a problem under normal conditions, but when external factors like snow or rain require drivers to adjust their speed, drivers under cognitive load fail to do so. Furthermore, their gaze concentrates more on the center of the road, and their detection of peripheral events drops. As a result, drivers under cognitive load either fail to notice, or notice too late, traffic signs and unexpected events like a car braking in front of them.

There is one crucial thing to note, though. Even though cognitive load affects driving, there is no strong evidence linking these effects to an increase in accidents.

In the past, research on this relied on simulators or controlled real-world experiments. Recently, thanks to technological progress, several research institutes have created large datasets of 'naturalistic driving'. The Virginia Tech Transportation Institute, for example, asked 100 drivers to be monitored for two years with unobtrusive hardware.

Interestingly, analyses of these datasets have revealed differences between the results of controlled experiments and real-world driving.

Is there a link between cognitive load and accident rate?

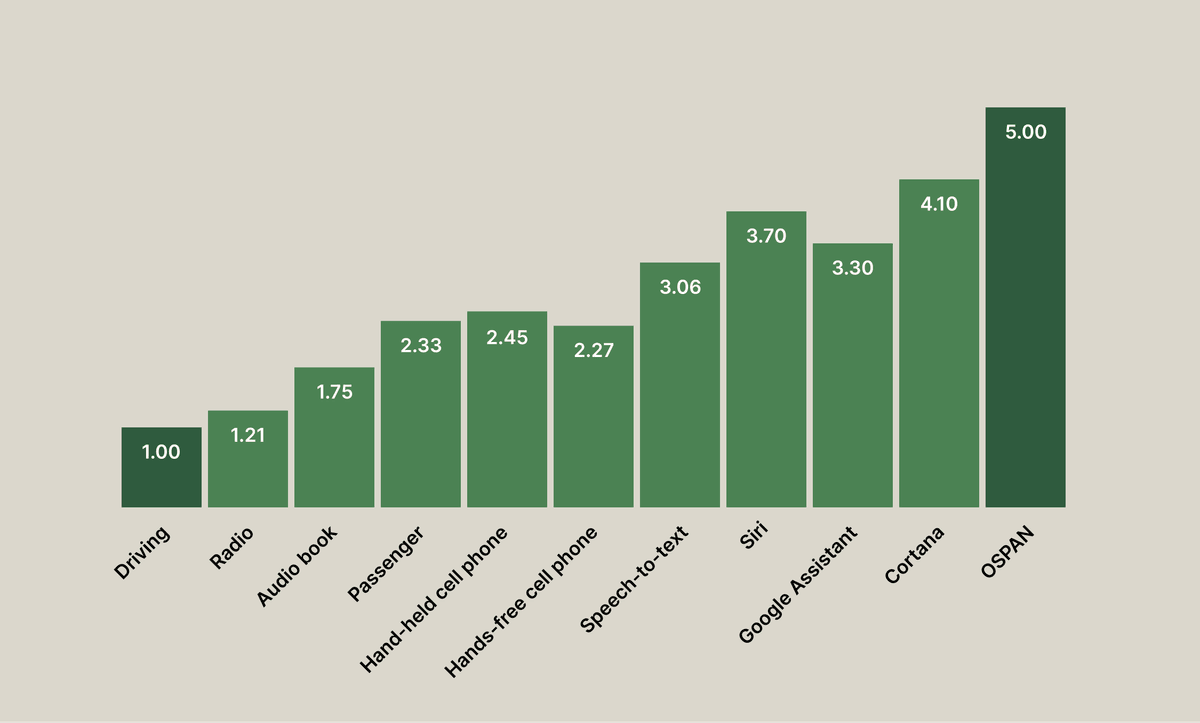

One often-cited study by David Strayer and his colleagues at the University of Utah for the AAA Foundation for Traffic Safety is an example of a controlled experiment whose conclusions are debatable. They tested various kinds of in-car voice interaction using a wide range of indicators, such as peripheral event detection, EEG activity, and vehicle control measures, to assess the cognitive load of different non-driving tasks. They mapped the results onto their own scale, where 1 is driving with no other task and 5 is the operation span task (a standardized measure of cognitive load).

According to their findings, there are three levels of cognitive load. The lowest level is listening to a book or radio, the second is talking to a passenger or having a phone conversation, and the highest level is caused by interacting with voice assistants.

Looking at these results, you would expect talking on the phone to increase crash risk, because it raises cognitive load. There also seems to be only a minor difference between hand-held and hands-free phone conversations. Controlled experiments confirmed that the distraction is caused by the conversation and not by the type of device used.

However, when looking at real-world data, there is a clear link between a reduction in accident rate and the use of hands-free devices. So even though there is a measurable increase in cognitive load from having a conversation, it does not result in more accidents. Similar results exist for having conversations with passengers.

Why does this difference exist? Probably because controlled experiments impose conditions that real driving does not: participants are instructed to perform specific tasks at particular times. Drivers in the real world have more control over their own cognitive workload. They are likely to engage in non-driving-related tasks when the road requires less attention.

Similarly, we can't treat the voice assistant results as evidence about driver distraction. What this study shows is that interacting with a voice assistant leads to higher cognitive load than any other type of verbal interaction inside the car, but that says more about the design and comfort of such technology than about driver distraction.

So what can we say about voice assistants?

Unfortunately, I couldn't find any naturalistic driving studies focused on voice interaction. The controlled experiments are often lacking too, especially given how quickly voice assistants are improving. This is a big gap in research that the car industry needs filled. Still, three areas of research around voice assistants contribute to this discussion.

Visual distraction

Interacting with a voice assistant not only causes cognitive load but also leads to visual distraction. This is consistent with the research on crossmodal perception showing strong links between the visual and auditory modalities. On top of that, some people tend to direct their gaze at the 'source' of the voice assistant (the center screen) when speaking, which increases visual distraction.

Obviously, it does not lead to the same level of visual distraction as a touch interface. But it is something to be mindful of when designing a voice assistant for driving.

Error handling

The second factor that affects voice interaction is error handling. In many experiments, much of the cognitive load of interacting with a voice assistant comes from handling errors.

For example, a situation that requires the driver's full attention often occurs mid-sentence. Voice assistants are not designed to wait through long pauses or filler words, so these interruptions lead to errors. We can only speculate about how that affects driving safety, though. Experiments in other domains show that one task can completely take over another because of its criticality. It would be interesting to see whether the same applies to voice assistants.

Perceived workload

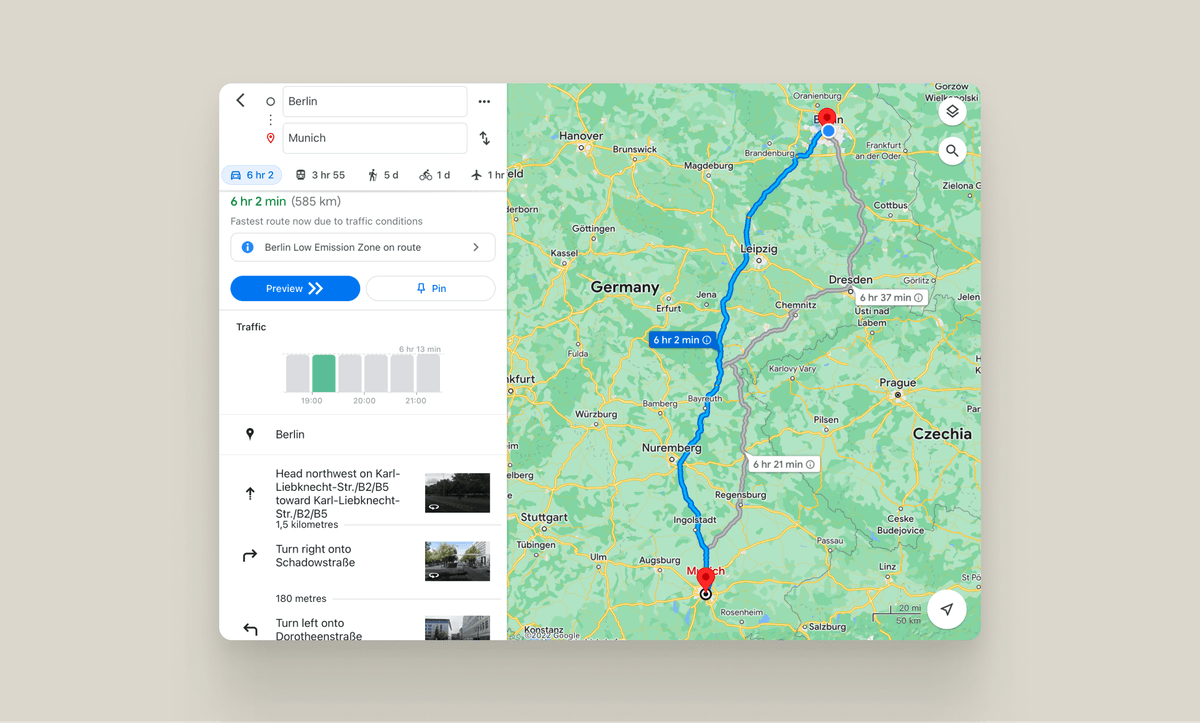

One key aspect to consider is that the preferred input mode depends on the use case. Take, for example, entering a destination in a navigation system. Typing it on a touchscreen keyboard is highly distracting visually, and it takes longer than using a voice assistant. On the other hand, when Google Maps presents you with three route options, picking one is easier with a touch interface, where you see the routes on a map and tap the one you want. Doing this by voice would be more complex.

Experiments measuring perceived workload reflect this. The design of the voice assistant therefore has a big impact on adoption and user acceptance.

I started this post by describing the challenge that automotive user experience designers face. Drivers want to do more non-driving-related activities while driving, but these activities are distracting. It is up to designers to mitigate this problem in the best way possible.

Voice interaction sounds great because the driver's eyes stay on the road while interacting with the voice assistant. Even though high cognitive load does seem to affect driving, there is no clear link between that and accident rate. Frustratingly, there is no naturalistic driving research on in-car voice interaction, which means we cannot say much about the distraction caused by interacting with a voice assistant.

If, after reading this (very long) post, you are disappointed with this conclusion, you are not alone. After 3 months of research, it was annoying to discover this. I'm not only doing this research for my blog, but also because I use it in my professional life as a designer in the car industry. So, having read 4 books and more than 50 papers, I can at least share how I now look at the topic and which decisions I will make in my own work.

If you are a designer in the car industry, you should take voice assistants seriously. Relying on multiple modalities will increase the multitasking capability of a driver. I don't look at a voice assistant as a nice addition to the interface, but as an integral part of it. Voice assistants should be included from the start of the design process.

Each in-car interaction has a preferred input mode, and the interface should nudge the driver to use it. For example, entering a destination in a navigation system is likely better done by voice, whereas choosing one of the suggested routes is probably better done by touch. Figuring this out is difficult and influenced by user acceptance. If voice interaction is the preferred input method but drivers don't like it, it won't be adopted. That is why the design and quality of the voice assistant matter a lot.

My research also turned up a lot more practical information about designing voice interaction. I'm currently turning that into a set of design guidelines, which I will share in my next post.

Until then, if you know of any relevant research that I have missed, reach out!